14 cool things you can do in Google Cloud

Guest post by the amazing Data Engineer, Marquin Smith.

Exporting GA4 data to BigQuery has introduced a whole new audience to the Google Cloud Platform (GCP).

While BigQuery is a fantastic tool for storing and analysing large quantities of data, such as Google Analytics 4 hit-level data, it can achieve some mighty things in data and analytics.

Below are fourteen interesting things to help expand and enhance your data capability within the Google Cloud Platform.

1. Store your GA4 backup data

Most people get started with Google Cloud Platform because it is recommended that they set it up to have a backup of Google Analytics data in a raw format. This is highly recommended as GA4 API, Looker Studio Connector, and the Explorer section only allow you to look back 14 months at most in the free version of GA4 (up to 50 months on GA4 360).

By backing up the GA4 data in the Google Cloud, you not only have access to the data for as long as you want, but you also have access to raw hit-level data, letting you do deeper insights. For example, check out this post on how to get page value from your BigQuery data.

2. Leverage public datasets in big query

Inside BigQuery, Google provides a wide range of publicly available datasets. Google curates and updates these datasets where appropriate.

These can be queried like any other data inside BigQuery.

A few of the most interesting public datasets inside BigQuery include:

3. Create forecasts with BigQuery Machine Learning

Create time series forecasts with SQL in BigQuery to forecast pageviews, visits or other metrics.

Forecasts can be created at any date granularity in which the training data is available, including daily, weekly, or monthly data.

Below is an example of creating a time series model to forecast daily visits.

CREATE MODEL visits_arima_model

OPTIONS (mo ndel_type='Ae RIMA_PLUS',

time_series_timestamp_column='day',

time_series_data_column='visits'

)

AS

SELECT

day,

visits

FROM

daily_visitsMachine learning in BigQuery quickstart.

As a bonus, BigQuery can also be used to create machine learning models other than time series, such as:

4. Bring in other valuable digital data into BQ and combine it with GA4 data

BigQuery doesn't just hold GA4 data. It can also be used to bring in other valuable digital data sources.

Here are some examples of other data sources that can be brought into BigQuery:

5. Load data into BigQuery from Google Sheets... and vice versa

Got valuable data in Google Sheets??? These can be accessed and queried from within BigQuery.

This is useful for reference or lookup tables that business teams need to keep updated. They can update the required data without knowing how to update a BigQuery table.

Alternatively, you can load data from BigQuery into Google Sheets.

6. Connect to a massive range of dashboard and visualisation services

Having clean processed data is only part of the job. Often, there is a need to visualise the data. As an established data warehouse, almost all major dashboard platforms connect to BigQuery.

Including but not limited to:

Tableau

Domo

Connect directly with Python and R to access their respective visualisation libraries.

7. Set up server-side GTM on Cloud Run

Server-side GTM or sGTM allows you to load off a lot of the tagging you used to have to have the client side to the server. This enables you to improve performance but also allows you to have more control over what data is sent to marketing platforms. This is essential if you are working with GDPR limitations to mask personal information like IP addresses.

The setup for this is easy if you already have Google Cloud Platform and are using Cloud Run, as Google Tag Manager has a one-click setup. It creates the server for you (note - it does set it up in the US by default, so you may need to create new servers locally). It also helps with mapping to a custom domain and creating SSL certificates.

8. Use Cloud Firestore to send profit data with GA4 data

Once you have sGTM set up, you can send data to GA4 and other marketing platforms that the end user can't see. This means that you can send profit data and revenue, but your competitors won't be able to scrape it from the page. Google Cloud Platform's Cloud Firestore is a NoSQL, transactional, and scalable database that offers near-real-time write/read and sync operations for data. There is an in-built connection with sGTM that allows for super fast look-ups. If you store the profit of a product in Cloud Firestore with the product SKU you can easily get that data and optimize campaigns based on profits instead of just revenue.

Simo has a great quick-start guide on how to use Cloud Firestore.

9. Run a Python script on a schedule

Sometimes, there is a Python script we would like to run on a regular schedule. Having the Python script run whether or not your laptop is on is a huge benefit, as it allows you to take holidays with a clear mind that everything will continue to tick along.

There are many ways of running a Python script from within GCP. The easiest is running the Python script in a Cloud Function. Video guide

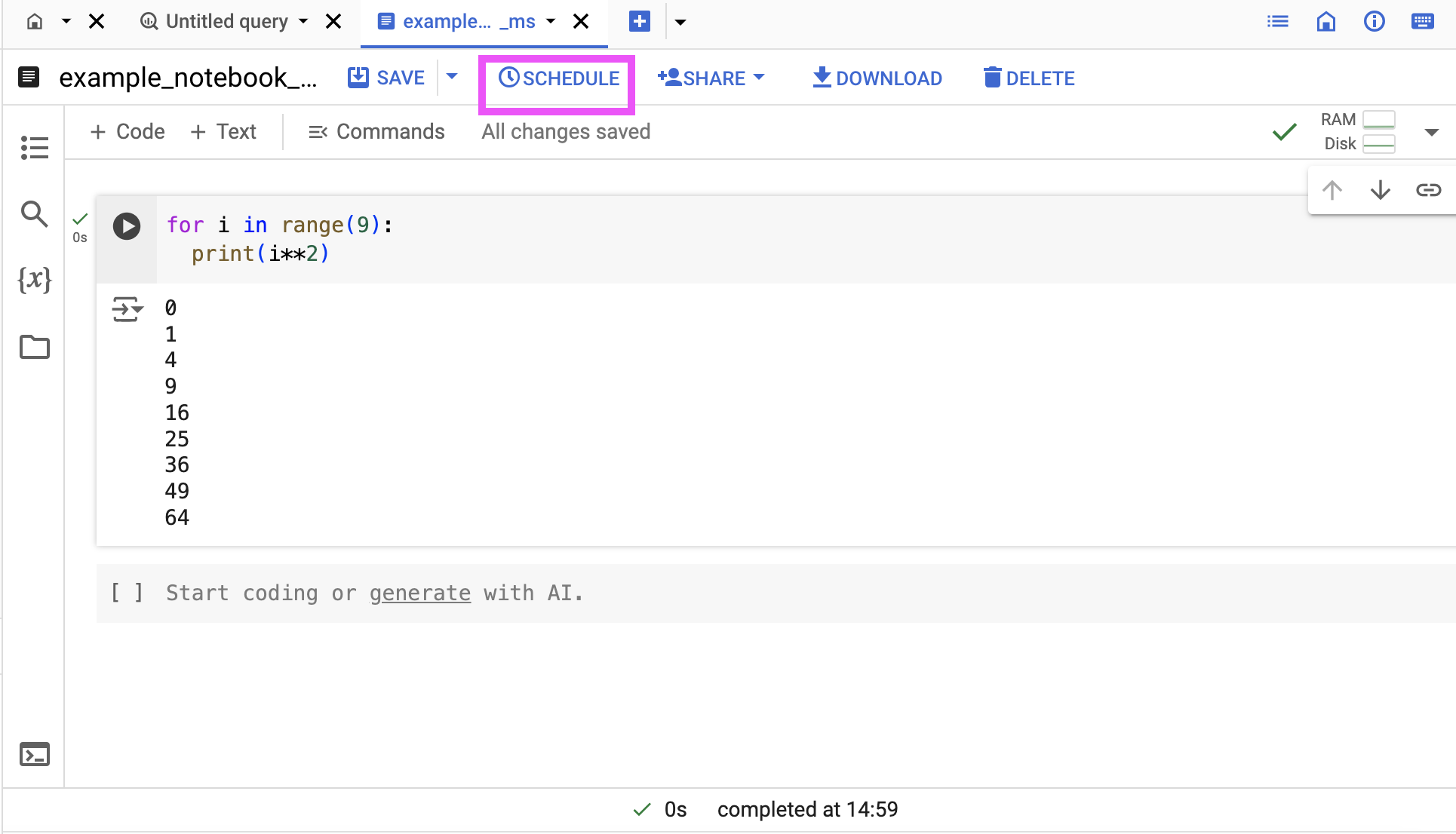

10. Run a Python notebook on a schedule

Jupyter notebooks are a versatile and valuable tool for data professionals.

Google has recently enabled the capability to run notebooks on a schedule as a feature within BigQuery Studio.

11. Run longer scripts in a virtual machine (Compute Engine)

Do you have a script that takes hours or days to run, for example, scraping multiple HTML pages, transforming data and storing it in a warehouse? Or backing up GA4 data you missed because you didn't connect BigQuery sooner. You can run these by creating a virtual machine in Google Cloud's Compute Engine. There is a quick start guide here.

12. Schedule and maintain data pipelines with a managed Airflow service (Cloud Composer)

As data grows within an organisation, typically managing the collection and update processes becomes large and complex.

Airflow allows different tasks to be set up with the necessary dependencies created between them.

Cloud composer is the managed version of Airflow within Google Cloud Platform. This means you don't have to worry about installing or running Airflow, as Google Cloud handles everything for you. This lets you focus on creating the pipelines and tasks to create business value.

13. Model existing data in BigQuery using Dataform.

Dataform is a powerful tool within BigQuery Studio. It is like DBT (Data Build Tool) integrated directly into BigQuery.

What is a DBT or Dataform, though? Well, it allows you to automate processes like:

merging different data sources

transformations on data

monitor dependencies of tables (if I update this table, what other tables will it affect)

monitor data quality

Dataform is entirely cloud-based, meaning you don't need to install anything on your laptop. Best of all, it's free to use. The tables and views it creates fall under BigQuery's pricing model.

14. Get product recommendations from Vertex AI

If you are in retail, up-selling and cross-selling can help increase the average order value. Google Cloud Platform has a Recommendation AI as part of Vertex AI, that leverages the power of Google Search Algorithm to understand what users buy together or upgrade to across all your purchases. Check out the implementation guide here.

Bonus links from other websites

Here are some other sites that have fun ways to explore Google Cloud Platform: